Linear Regression

Linear Regression and the Gradient Descent method for learning a Linear Regression model.

Linear Regression: Machine Learning Algorithm

Variables in Linear Regression

Independent variable

- If X is the numerical input variable, X is called the independent variable or predictor. It is the input of the model. All features (covariates) are independent variables.

Dependent variable

- If Y is the numerical output variable, Y is called the dependent variable or response variable. It is the output of the model.

Machine learning models are built to derive the relationship between the dependent variable and the independent variables. In the case of linear regression, the model predicts a continuous dependent variable from the values of the independent variables.

- Linear regression is a supervised learning technique. It models the relationship between a dependent and an independent variable, which is assumed to be linear.

- E.g. income vs. expenditure, chocolate vs. cost, CGPA vs. placement package, etc.

- The output is a function that predicts the dependent variable on the basis of the values of the independent variables.

- A straight line is used to fit the data.

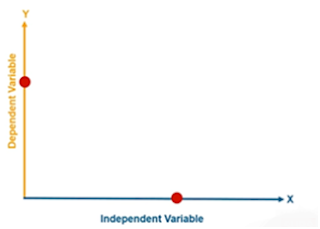

The dependent and independent variable plot shows the independent variable on the X-axis and the dependent variable on the Y-axis.

Linear regression is a simple approach to supervised learning. In the table, AREA is the independent variable and the cost of the flat is the dependent variable.

Linear Relation – In the graph, the stress test is the independent variable and blood pressure is the dependent variable. The graph shows there is a linear relationship between the dependent and independent variables.

Linear regression requires a linear correlation between the variables. What is correlation?

Correlation

- X and Y can exist in three different types of relations: positive, negative, or no correlation.

- They can also exist in a weak relation.

- Correlation is a statistical technique that shows whether and how strongly pairs of variables are related.

- The main result of a correlation is called the correlation coefficient (or "r"). It ranges from -1.0 to +1.0. The closer r is to +1 or -1, the more closely the two variables are related.

- If r is close to 0, there is little or no linear relationship between the variables.

- If r is positive, it means that as one variable gets larger the other gets larger.

- If r is negative, it means that as one gets larger the other gets smaller (often called an "inverse" correlation).
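As a rough illustration of how r is computed, the sketch below implements the Pearson correlation coefficient in plain Python. The function name `pearson_r` is an assumption made for this example, and the data is the five-week sales table from Solved Example 1 later in this post.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations from the means (co-variation of x and y)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations of each variable
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


weeks = [1, 2, 3, 4, 5]
sales = [1.2, 1.8, 2.6, 3.2, 3.8]  # in thousands

r = pearson_r(weeks, sales)
print(f"r = {r:.4f}")  # close to +1: strong positive linear relation
```

Negating one of the two sequences flips the sign of r, which corresponds to the "inverse" case described above.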


Equation of Linear Regression –

Let the paired data points be (x1, y1), (x2, y2), . . . , (xn, yn).

Y = β0 + β1 * X

β0, β1: the coefficients (β0 is the intercept, β1 is the slope)

X: independent variable

Y: dependent variable

Types of Linear Regression –

- Simple Linear Regression: a single explanatory variable

Y = β0 + β1 * X

- If two or more explanatory variables have a linear relationship with the dependent variable, the regression is called a Multiple Linear Regression

Y = β0 + β1 * X1 + β2 * X2 + β3 * X3 + … + βm * Xm
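To make the two equations concrete, here is a minimal Python sketch that evaluates them. The function name `predict` and the coefficient values are hypothetical and chosen only for illustration; they are not fitted to any data.

```python
def predict(beta0, betas, xs):
    """Evaluate Y = beta0 + beta1 * X1 + ... + betam * Xm for one sample."""
    if len(betas) != len(xs):
        raise ValueError("need exactly one coefficient per independent variable")
    return beta0 + sum(b * x for b, x in zip(betas, xs))


# Simple linear regression: one independent variable (made-up coefficients)
y_simple = predict(1.0, [2.0], [3.0])  # 1.0 + 2.0 * 3.0

# Multiple linear regression: two independent variables (made-up coefficients)
y_multiple = predict(1.0, [2.0, 3.0], [4.0, 5.0])  # 1.0 + 2.0 * 4.0 + 3.0 * 5.0

print(y_simple, y_multiple)
```

The simple case is just the multiple case with a single coefficient, so one function covers both forms.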

Examples of Multiple Linear Regression –

- Weather forecasting

- Water demand of a city (population, economy, water losses and water restrictions)

- Healthcare (malaria prediction)

Gradient Descent –

Gradient descent optimizes the values of the coefficients iteratively so as to minimize the error of the model on the data.

- Start with a random value for each coefficient

- Calculate the sum of squared errors over all pairs of input and output values

- Use the learning rate as a scale factor for the size of each update

- Update the coefficients in the direction that reduces the error

- Repeat the process until a minimum squared error is reached or no further improvement is possible
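The steps above can be written as update equations. The block below is a sketch that assumes the mean squared error (MSE) as the cost function J and writes the learning rate as α; using the sum of squared errors instead only changes the constant factor 1/n.

```latex
J(\beta_0, \beta_1) = \frac{1}{n} \sum_{i=1}^{n} \bigl( y_i - (\beta_0 + \beta_1 x_i) \bigr)^2

\frac{\partial J}{\partial \beta_0} = -\frac{2}{n} \sum_{i=1}^{n} \bigl( y_i - (\beta_0 + \beta_1 x_i) \bigr)
\qquad
\frac{\partial J}{\partial \beta_1} = -\frac{2}{n} \sum_{i=1}^{n} x_i \bigl( y_i - (\beta_0 + \beta_1 x_i) \bigr)

\beta_0 \leftarrow \beta_0 - \alpha \frac{\partial J}{\partial \beta_0}
\qquad
\beta_1 \leftarrow \beta_1 - \alpha \frac{\partial J}{\partial \beta_1}
```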

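Summary –

- Linear regression predicts a continuous dependent variable based on the value of the independent variable

- The dependent variable is always continuous

- The coefficients can be estimated with the least squares method

- Y = β0 + β1 * X is a straight line: the best-fit curve

- Linear relation between the independent (I) and dependent (D) variables

- The output is a predicted value

- Typical use: business prediction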

Solved Example 1: (Least Squares Method)


Consider an example where 5 weeks of sales data are given in the table below:

| X (Week) | Y (Sales, in thousands) |
|---|---|
| 1 | 1.2 |
| 2 | 1.8 |
| 3 | 2.6 |
| 4 | 3.2 |
| 5 | 3.8 |

Apply the linear regression technique to predict the sales in the 7th and 12th weeks.

Solution:

The equation for linear regression is

Y = β0 + β1 * X ----------------- (1)

where X is the independent variable and Y is the dependent variable.

To predict a value, we need to find the optimized values of β0 and β1.

The least squares method finds the values of β0 and β1 as follows:

β1 = (xy¯ - x¯ * y¯) / (x^2¯ - (x¯)^2), β0 = y¯ - β1 * x¯ ----------------- (2)

where x¯ is the mean of x, y¯ is the mean of y, x^2¯ is the mean of x^2, and xy¯ is the mean of xy.

Let us calculate these values:

| X (Week) | Y (Sales, in thousands) | x^2 | xy |
|---|---|---|---|
| 1 | 1.2 | 1 | 1.2 |
| 2 | 1.8 | 4 | 3.6 |
| 3 | 2.6 | 9 | 7.8 |
| 4 | 3.2 | 16 | 12.8 |
| 5 | 3.8 | 25 | 19 |
| Sum = 15 | 12.6 | 55 | 44.4 |
| x¯ = 3 | y¯ = 2.52 | x^2¯ = 11 | xy¯ = 8.88 |

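Let us put these values in equation (2):

β1 = (xy¯ - x¯ * y¯) / (x^2¯ - (x¯)^2) = (8.88 - 3 * 2.52) / (11 - 9) = 1.32 / 2 = 0.66

β0 = y¯ - β1 * x¯ = 2.52 - 0.66 * 3 = 0.54

The regression line equation will be

y = 0.54 + 0.66 x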

Let us use this equation to predict the sales in weeks 7 and 12.

i) x = 7

y = 0.54 + 0.66 * 7

y = 5.16 (in thousands)

ii) x = 12

y = 0.54 + 0.66 * 12

y = 8.46 (in thousands)
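The calculation above can be reproduced with a short Python sketch. It assumes the mean-based form of the least squares estimates, β1 = (xy¯ - x¯ * y¯) / (x^2¯ - (x¯)^2) and β0 = y¯ - β1 * x¯, which matches the columns of the table; the function names `fit_least_squares` and `predict_sales` are made up for this example.

```python
def fit_least_squares(xs, ys):
    """Return (beta0, beta1) of the least squares line y = beta0 + beta1 * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    mean_x2 = sum(x * x for x in xs) / n              # mean of x^2
    mean_xy = sum(x * y for x, y in zip(xs, ys)) / n  # mean of x*y
    beta1 = (mean_xy - mean_x * mean_y) / (mean_x2 - mean_x ** 2)
    beta0 = mean_y - beta1 * mean_x
    return beta0, beta1


def predict_sales(beta0, beta1, week):
    """Evaluate the fitted line for a given week."""
    return beta0 + beta1 * week


weeks = [1, 2, 3, 4, 5]
sales = [1.2, 1.8, 2.6, 3.2, 3.8]  # in thousands

beta0, beta1 = fit_least_squares(weeks, sales)
print(f"y = {beta0:.2f} + {beta1:.2f} x")
for week in (7, 12):
    print(f"week {week}: {predict_sales(beta0, beta1, week):.2f} (in thousands)")
```

Note that weeks 7 and 12 lie outside the range of the observed data, so these predictions rely on the linear trend continuing.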

Solved Example 2: (Gradient Descent Method)

Gradient descent is an optimization algorithm that finds the values of the coefficients that minimize the error, i.e. the cost function. It is one of the most commonly used optimization algorithms for training machine learning models, minimizing the error between actual and predicted results. The steps are as follows:

- Step 1: Initialize the parameters of the model, β0 and β1, randomly.

- Step 2: Compute the gradient of the cost function (MSE/SSE) with respect to each parameter. This involves taking the partial derivative of the cost function with respect to each parameter.

- Step 3: Update the model's parameters by taking a step in the direction opposite to the gradient. Here we choose a hyperparameter, the learning rate, denoted by alpha (α). It determines the step size of each update.

- Step 4: Repeat steps 2 and 3 iteratively to get the best parameters for the defined model.
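The four steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: the cost function is taken to be the MSE, and the function name `gradient_descent`, the learning rate of 0.05, the 5000 iterations, and the fixed random seed are all choices made for this example. The data is the five-week sales table from Solved Example 1.

```python
import random


def gradient_descent(xs, ys, alpha=0.05, iterations=5000, seed=0):
    """Fit y = beta0 + beta1 * x by batch gradient descent on the MSE."""
    n = len(xs)
    rng = random.Random(seed)
    # Step 1: initialize the parameters randomly
    beta0 = rng.uniform(-1.0, 1.0)
    beta1 = rng.uniform(-1.0, 1.0)
    for _ in range(iterations):
        # Residuals y_i - (beta0 + beta1 * x_i) under the current parameters
        residuals = [y - (beta0 + beta1 * x) for x, y in zip(xs, ys)]
        # Step 2: partial derivatives of the MSE with respect to each parameter
        grad_beta0 = -2.0 / n * sum(residuals)
        grad_beta1 = -2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        # Step 3: step against the gradient, scaled by the learning rate
        beta0 -= alpha * grad_beta0
        beta1 -= alpha * grad_beta1
        # Step 4: the loop repeats steps 2 and 3
    return beta0, beta1


weeks = [1, 2, 3, 4, 5]
sales = [1.2, 1.8, 2.6, 3.2, 3.8]  # in thousands

beta0, beta1 = gradient_descent(weeks, sales)
print(f"y = {beta0:.2f} + {beta1:.2f} x")
```

With these settings the estimates should approach the least squares line y = 0.54 + 0.66 x from Solved Example 1. The learning rate matters: for this unscaled data, a much larger value (for example 0.1) makes the updates diverge instead of converging.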
