Performance Metrics for Regression Models


In a machine learning workflow, once the model is built, the next step is to evaluate it using various performance criteria. The performance metrics used for regression models and classification models are different; they are listed below.

Regression Model: In regression analysis, the output is a continuous value, so the following metrics are used to evaluate regression performance:

- Mean squared error (MSE)

- Mean absolute error (MAE)

- Root mean squared error (RMSE)

- R Square

Classification Model: In a classification model, the output is discrete (a class label), and the following metrics are used to evaluate classification performance:

- Confusion matrix

- Accuracy

- Precision

- Recall (sensitivity)

- Specificity

- ROC curve and AUC (Area Under the ROC Curve): AUC is useful when we are not concerned about which class (for example, the smaller class of the dataset) is treated as positive, in contrast to the F1 score, where the choice of positive class matters.

- F-score (the F1 score is useful when the positive class is relatively small)
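Most of the classification metrics listed above can be derived from the four cells of a binary confusion matrix. The sketch below is a minimal illustration, not a library implementation: the function names `safe_div` and `classification_metrics` and the counts are hypothetical, and returning 0.0 for a zero denominator is an assumed convention. ROC/AUC is not covered because it needs predicted scores rather than counts.

```python
def safe_div(num, den):
    # Assumption: report 0.0 when a denominator is zero (one common convention).
    return num / den if den else 0.0


def classification_metrics(tp, fp, fn, tn):
    """Metrics derived from the four cells of a binary confusion matrix."""
    precision = safe_div(tp, tp + fp)  # share of predicted positives that are correct
    recall = safe_div(tp, tp + fn)     # sensitivity: share of actual positives found
    return {
        "accuracy": safe_div(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "specificity": safe_div(tn, tn + fp),  # share of actual negatives found
        # F1 is the harmonic mean of precision and recall.
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


# Hypothetical imbalanced data: 10 actual positives, 90 actual negatives.
metrics = classification_metrics(tp=6, fp=4, fn=4, tn=86)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these counts accuracy is 0.92 even though only 6 of the 10 positives are found (recall 0.6), which is why the F1 score is more informative when the positive class is small.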

Performance metrics should be chosen based on the problem domain and the goals of the project.

1. Mean Squared Error (MSE):

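MSE, or Mean Squared Error, is one of the most commonly used metrics for regression tasks. It is simply the average of the squared differences between the target values and the values predicted by the regression model. Because the differences are squared, errors smaller than 1 shrink while larger errors are magnified, so large errors are penalized much more heavily than small ones. As a result, a few large errors or outliers can dominate the score and make the model look worse than it performs on most samples. MSE is often preferred as a training objective because it is differentiable, which makes it easy to optimize with gradient-based methods.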

$$\text{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

Here $n$ is the number of samples, $y_i$ is the target value, and $\hat{y}_i$ is the predicted value.
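The MSE formula can be sketched in plain Python. This is a minimal illustration with our own naming (`mean_squared_error` mirrors the name of scikit-learn's `sklearn.metrics.mean_squared_error`, but it is not that library's code), and the sample values are made up.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    n = len(y_true)
    return sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / n


# Hypothetical targets and predictions; errors are 0.5, 0, -0.5 and -1.
# Squaring shrinks the 0.5 errors to 0.25 each, while the error of 1 stays 1.
y_true = [3.0, 5.0, 2.5, 7.0]
y_pred = [2.5, 5.0, 3.0, 8.0]
print(mean_squared_error(y_true, y_pred))  # 0.375
```

A single error of 10 would contribute 100 to the sum on its own, which is the sense in which MSE punishes large errors.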

2. Mean Absolute Error (MAE):

MAE is the average of the absolute differences between the target values and the values predicted by the model. MAE is more robust to outliers and does not penalize errors as heavily as MSE. It is a linear score, which means all the individual differences are weighted equally. It is therefore not suitable for applications where you want to pay more attention to outliers.
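The difference in outlier sensitivity can be seen by computing both scores on the same data, using $\text{MAE} = \frac{1}{n}\sum_{i=1}^{n}\lvert y_i - \hat{y}_i\rvert$. The sketch below is illustrative only; the function names and the data (four predictions off by 1 and one off by 20) are hypothetical.

```python
def mean_absolute_error(y_true, y_pred):
    """Average of the absolute differences between targets and predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    return sum(abs(y - y_hat) for y, y_hat in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences, for comparison."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    return sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / len(y_true)


# Hypothetical data: the last prediction is an outlier (off by 20).
y_true = [10.0, 12.0, 14.0, 16.0, 18.0]
y_pred = [11.0, 11.0, 15.0, 15.0, 38.0]
print(mean_absolute_error(y_true, y_pred))  # (1+1+1+1+20)/5 = 4.8
print(mean_squared_error(y_true, y_pred))   # (1+1+1+1+400)/5 = 80.8
```

The outlier accounts for 20 of the 24 units summed by MAE, but 400 of the 404 units summed by MSE, so it almost completely determines the MSE.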

3. Root Mean Squared Error (RMSE):

RMSE is one of the most widely used metrics for regression tasks. It is the square root of the average squared difference between the target values and the values predicted by the model. Because the errors are squared before averaging, RMSE imposes a high penalty on large errors, which makes it useful when large errors are particularly undesirable. Taking the square root also brings the score back to the same units as the target variable, which makes RMSE easier to interpret than MSE.
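RMSE is $\sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2}$, i.e. the square root of the MSE. A minimal sketch follows; the function name and the "prices in thousands" framing are hypothetical, chosen only so the result has a unit.

```python
import math


def root_mean_squared_error(y_true, y_pred):
    """Square root of the MSE, expressed in the same units as the target."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    mse = sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


# Hypothetical prices in thousands; errors are 0, 0, -6 and 8.
y_true = [200.0, 250.0, 300.0, 350.0]
y_pred = [200.0, 250.0, 306.0, 342.0]
print(root_mean_squared_error(y_true, y_pred))  # sqrt(100 / 4) = 5.0 (thousand)
```

For the same data the MAE would be (0 + 0 + 6 + 8) / 4 = 3.5; RMSE is higher because the two nonzero errors are weighted more heavily after squaring.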


4. R Square (R²):

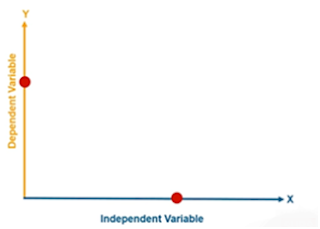

The coefficient of determination, or R², is another metric used for evaluating the performance of a regression model. It compares the current model with a constant baseline and tells us how much better the model is than that baseline. The constant baseline is obtained by taking the mean of the target values and drawing a horizontal line at that mean. R² is a scale-free score: no matter how large or small the values are, R² is always less than or equal to 1, and it can even be negative when the model performs worse than the baseline.
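The scale-free property can be checked numerically using the standard definition $R^2 = 1 - \text{MSE}_{\text{model}} / \text{MSE}_{\text{baseline}}$, where the baseline always predicts the mean. In this sketch the function name `r_squared` and all data are hypothetical; rescaling both targets and predictions by 1000 changes the MSE by a factor of one million but leaves R² unchanged.

```python
def r_squared(y_true, y_pred):
    """R² = 1 - MSE_model / MSE_baseline; the baseline always predicts the mean."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    y_bar = sum(y_true) / n
    mse_model = sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / n
    mse_baseline = sum((y - y_bar) ** 2 for y in y_true) / n
    if mse_baseline == 0:
        # All targets are identical, so the ratio is undefined.
        raise ValueError("R² is undefined when all target values are equal")
    return 1 - mse_model / mse_baseline


y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]

# Rescale everything by 1000 (e.g. metres to millimetres).
scale = 1000.0
y_true_big = [y * scale for y in y_true]
y_pred_big = [y * scale for y in y_pred]

print(round(r_squared(y_true, y_pred), 6))          # 0.8
print(round(r_squared(y_true_big, y_pred_big), 6))  # 0.8, unchanged

# Predictions worse than always guessing the mean give a negative R².
print(r_squared(y_true, [4.0, 3.0, 2.0, 1.0]))      # -3.0
```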

The baseline model assumes that every prediction is simply the mean of the observed data, so its Mean Squared Error (MSE) is the average of the squared differences between the actual values and the mean of the actual values:

$$\text{MSE}_{\text{baseline}} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2$$

- $y_i$ is the actual value of the dependent variable.

- $\bar{y}$ is the mean of the actual values.

- $n$ is the number of observations.

This value is the (population) variance of the actual values: the average squared difference between each actual value and their mean.

The model's MSE is calculated by taking the average of the squared differences between the actual values and the values predicted by the regression model:

$$\text{MSE}_{\text{model}} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

- $y_i$ is the actual value.

- $\hat{y}_i$ is the predicted value from the model.

- $n$ is the number of observations.

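R² is then obtained by comparing the two:

$$R^2 = 1 - \frac{\text{MSE}_{\text{model}}}{\text{MSE}_{\text{baseline}}}$$

An R² close to 1 means the model's MSE is much smaller than the baseline's, which indicates that the model's predictions are very close to the actual values and that the model fits the data well.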
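The steps above (baseline MSE, model MSE, then R²) can be traced on a small made-up dataset. This is an illustrative sketch only; the numbers are hypothetical and not taken from the original post. It also uses `statistics.pvariance` from the Python standard library to confirm that the baseline MSE equals the population variance of the actual values.

```python
import statistics

# Hypothetical observations and predictions, made up for illustration.
y_true = [3.0, 5.0, 7.0, 9.0, 11.0]
y_pred = [3.5, 4.5, 7.0, 9.5, 10.5]

n = len(y_true)
y_bar = sum(y_true) / n  # mean of the actual values (7.0 here)

# Baseline model: predict y_bar for every observation.
mse_baseline = sum((y - y_bar) ** 2 for y in y_true) / n

# Regression model: compare each actual value with its prediction.
mse_model = sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / n

r2 = 1 - mse_model / mse_baseline

print(mse_baseline)                  # 8.0
print(statistics.pvariance(y_true))  # 8.0, the same quantity
print(mse_model)                     # 0.2
print(round(r2, 3))                  # 0.975
```

Here the model's MSE is only 1/40 of the baseline's, so R² is 0.975, close to 1.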
